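Hadoop 1 had two core components: HDFS and MapReduce. In Hadoop 2 the core becomes HDFS and YARN. The new HDFS no longer has a single NameNode; it can have several (currently only two are supported), and each has the same role.

Two NameNodes

While the cluster is running, only the NameNode in the active state serves requests. The NameNode in the standby state waits on standby and continuously syncs data from the active NameNode. If the active NameNode stops working, the standby NameNode can be switched to active, either manually or automatically, and service continues. This is high availability.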

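When a NameNode fails

The two NameNodes share their data in real time. The new HDFS uses a sharing mechanism for this: either a JournalNode cluster or NFS. NFS works at the operating-system level, while JournalNode works at the Hadoop level. This setup uses a JournalNode cluster for data sharing.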
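To make the JournalNode sharing a little more concrete, here is a minimal sketch of the majority rule that a quorum of JournalNodes relies on: an edit counts as written once more than half of the JournalNodes have acknowledged it. The function names are made up for illustration and are not part of Hadoop.

```python
def majority(n: int) -> int:
    """Smallest number of nodes that forms a majority of n."""
    return n // 2 + 1


def tolerated_failures(n: int) -> int:
    """How many nodes may be down while a majority is still reachable."""
    return n - majority(n)


def edit_is_durable(acks: int, journal_nodes: int) -> bool:
    """An edit counts as written once a majority of JournalNodes acknowledged it."""
    return acks >= majority(journal_nodes)


for n in (3, 4, 5):
    print(n, "JournalNodes: majority", majority(n),
          "tolerates", tolerated_failures(n), "failure(s)")
```

With the five JournalNodes used in this setup, two of them can be down and edits are still accepted. Four nodes tolerate no more failures than three, which is why an odd count is the usual choice.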

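Automatic NameNode failover

Automatic failover needs a ZooKeeper cluster for the election. Both NameNodes of an HDFS cluster register in ZooKeeper. When the active NameNode fails, ZooKeeper detects it and the standby NameNode is switched to active automatically.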

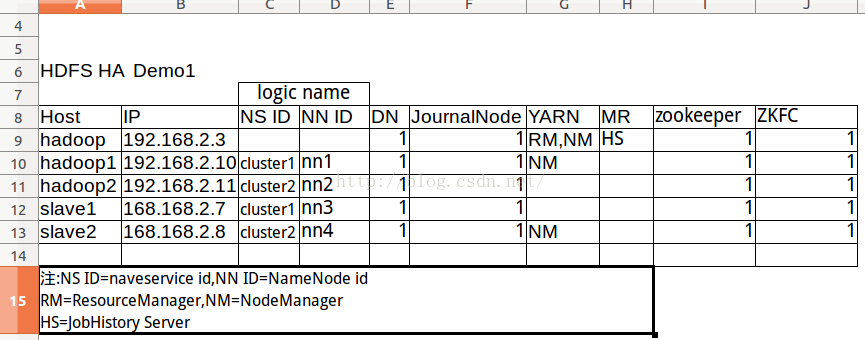
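The election can be pictured with a toy model: whoever holds a single lock is active, and the lock is released when its holder fails. This is only a sketch of the idea, not how ZooKeeper or the zkfc process is actually implemented; the class and method names are invented for this example.

```python
class ToyFailover:
    """Toy model of active/standby election around a single lock."""

    def __init__(self, namenodes):
        self.alive = {name: True for name in namenodes}
        self.lock_holder = None  # stands in for an ephemeral lock in ZooKeeper
        self._elect()

    def _elect(self):
        # If nobody holds the lock, the first healthy NameNode takes it.
        if self.lock_holder is None:
            for name, healthy in self.alive.items():
                if healthy:
                    self.lock_holder = name
                    break

    def state(self, name):
        if not self.alive[name]:
            return "down"
        return "active" if name == self.lock_holder else "standby"

    def fail(self, name):
        """Mark a NameNode as failed; its lock is released and a standby takes over."""
        self.alive[name] = False
        if self.lock_holder == name:
            self.lock_holder = None
            self._elect()

    def recover(self, name):
        """A recovered NameNode rejoins as standby unless the lock is free."""
        self.alive[name] = True
        self._elect()


cluster1 = ToyFailover(["hadoop1", "hadoop2"])
cluster1.fail("hadoop1")
print(cluster1.state("hadoop1"), cluster1.state("hadoop2"))
```

Note that a recovered NameNode does not take the active role back; it comes back as standby, which matches what the walkthrough observes later when the active role moves to the other node.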

HDFS Federation

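The NameNode is the core node and maintains the metadata of the whole HDFS, so its capacity is limited by the memory of the server it runs on. Once the metadata no longer fits in the NameNode server's memory, the HDFS cluster cannot take any more data and has reached the end of its life. Its scalability is therefore limited. HDFS Federation means several HDFS clusters working at the same time, so the capacity is in theory no longer bounded; put boldly, it can scale without limit.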

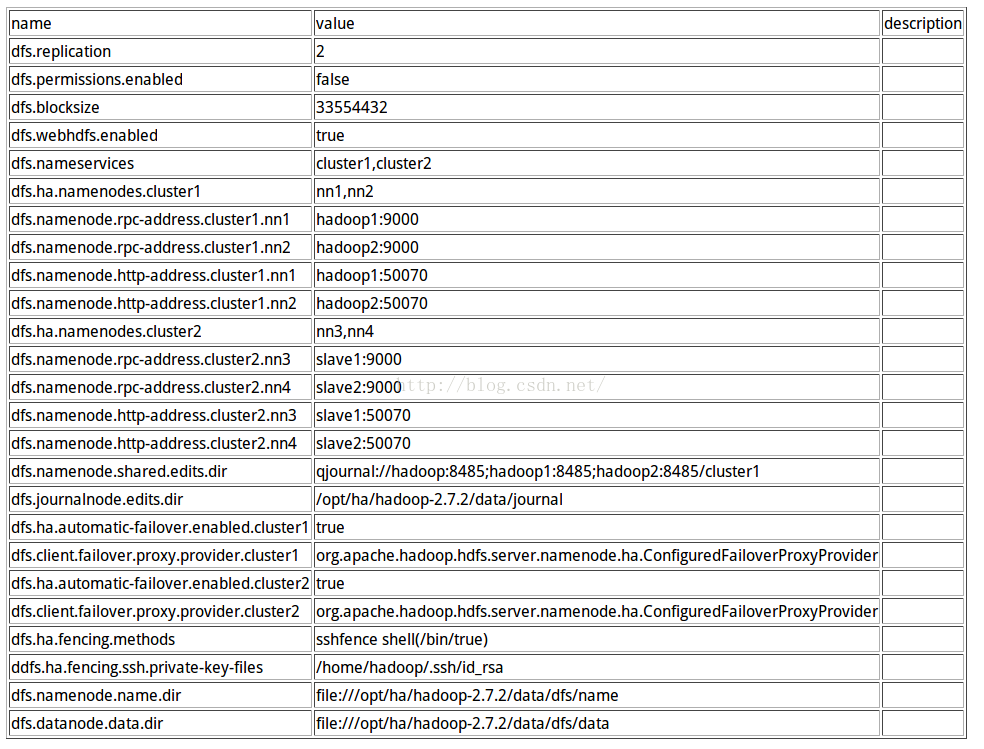
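A rough back-of-the-envelope calculation shows why federation helps. The sketch below assumes about 150 bytes of NameNode heap per namespace object (file, directory or block), which is a commonly quoted rule of thumb and not a figure from this article; the heap sizes are hypothetical too.

```python
BYTES_PER_OBJECT = 150  # assumption: rough rule of thumb, not a measured value
GIB = 1024 ** 3


def max_objects(heap_bytes: int, bytes_per_object: int = BYTES_PER_OBJECT) -> int:
    """Approximate number of namespace objects one NameNode heap can hold."""
    return heap_bytes // bytes_per_object


def federated_capacity(heaps) -> int:
    """Total objects across independent namespaces, one NameNode heap each."""
    return sum(max_objects(h) for h in heaps)


single = max_objects(64 * GIB)
two_clusters = federated_capacity([64 * GIB, 64 * GIB])
print(single, two_clusters)
```

Under these assumptions, each additional namespace adds its own NameNode's worth of metadata capacity, so the total grows linearly with the number of federated clusters.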

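There are six configuration files: hadoop-env.sh, core-site.xml, hdfs-site.xml, mapred-site.xml, yarn-site.xml and slaves. Except for hdfs-site.xml, which differs between the clusters, the other files are identical on the four nodes and can simply be copied.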
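The article does not show hdfs-site.xml itself, so the following is only a hedged sketch of what the HA and federation part of it might contain, generated from a small description of the two clusters. The property names are the standard HDFS HA ones; the NameNode IDs `nn1`/`nn2`, the RPC port 8020 and the JournalNode port 8485 are assumptions, as is the idea that only the shared edits directory differs between the clusters.

```python
from xml.sax.saxutils import escape

PROXY_PROVIDER = ("org.apache.hadoop.hdfs.server.namenode.ha."
                  "ConfiguredFailoverProxyProvider")


def hdfs_site_properties(clusters, journal_hosts, local_nameservice,
                         rpc_port=8020, http_port=50070, journal_port=8485):
    """Build HA/federation properties for the nodes of one nameservice."""
    props = {"dfs.nameservices": ",".join(clusters)}
    for ns, hosts in clusters.items():
        # Assumed NameNode IDs: nn1, nn2, ... in the order the hosts are given.
        ids = [f"nn{i}" for i in range(1, len(hosts) + 1)]
        props[f"dfs.ha.namenodes.{ns}"] = ",".join(ids)
        for nn_id, host in zip(ids, hosts):
            props[f"dfs.namenode.rpc-address.{ns}.{nn_id}"] = f"{host}:{rpc_port}"
            props[f"dfs.namenode.http-address.{ns}.{nn_id}"] = f"{host}:{http_port}"
        props[f"dfs.client.failover.proxy.provider.{ns}"] = PROXY_PROVIDER
    journal = ";".join(f"{h}:{journal_port}" for h in journal_hosts)
    # The per-cluster part: each nameservice writes to its own journal ID.
    props["dfs.namenode.shared.edits.dir"] = f"qjournal://{journal}/{local_nameservice}"
    props["dfs.ha.automatic-failover.enabled"] = "true"
    return props


def to_xml(props):
    """Render the properties in Hadoop's <property> XML layout."""
    lines = ["<configuration>"]
    for name, value in props.items():
        lines.append("  <property>")
        lines.append(f"    <name>{escape(name)}</name>")
        lines.append(f"    <value>{escape(value)}</value>")
        lines.append("  </property>")
    lines.append("</configuration>")
    return "\n".join(lines)


clusters = {"cluster1": ["hadoop1", "hadoop2"], "cluster2": ["slave1", "slave2"]}
journal_hosts = ["hadoop", "hadoop1", "hadoop2", "slave1", "slave2"]
print(to_xml(hdfs_site_properties(clusters, journal_hosts, "cluster1")))
```

A real file needs more than this, for example a fencing method (`dfs.ha.fencing.methods`) and the JournalNode and NameNode storage directories, so treat the output as a starting point to compare against the official documentation.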

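core-site.xml

fs.defaultFS: the default HDFS path. When several HDFS clusters work at the same time and the user does not write a cluster name, this setting decides which one is used. The value comes from the configuration in hdfs-site.xml.

hadoop.tmp.dir: by default, the common directory under which the NameNode, DataNode, JournalNode and others store their data.

ha.zookeeper.quorum: the addresses and ports of the ZooKeeper cluster. Note that the number of servers must be odd.

    <property>
      <name>fs.defaultFS</name>
      <value>hdfs://cluster1</value>
    </property>
    <property>
      <name>hadoop.tmp.dir</name>
      <value>/opt/ha/hadoop-2.7.2/data/tmp</value>
    </property>
    <property>
      <name>io.file.buffer.size</name>
      <value>131072</value>
    </property>
    <property>
      <name>ha.zookeeper.quorum</name>
      <value>hadoop:2181,hadoop1:2181,hadoop2:2181,slave1:2181,slave2:2181</value>
    </property>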
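As a small illustration of how two of these core-site.xml values are interpreted, the sketch below qualifies a path that has no cluster name with `fs.defaultFS`, and splits an `ha.zookeeper.quorum` string into host and port pairs. Both helpers are hypothetical and stricter than Hadoop itself; they only show the expected shape of the values (comma-separated `host:port` entries, an odd number of them).

```python
from urllib.parse import urlparse


def qualify(path: str, default_fs: str) -> str:
    """Prefix an absolute path with fs.defaultFS; leave full URIs untouched."""
    if urlparse(path).scheme:
        return path
    if not path.startswith("/"):
        # Relative paths resolve against the user's home directory; not modeled here.
        raise ValueError("only absolute paths are handled in this sketch")
    return default_fs.rstrip("/") + path


def parse_quorum(value: str):
    """Split a comma-separated host:port list into (host, port) pairs."""
    servers = []
    for entry in value.split(","):
        host, sep, port = entry.strip().partition(":")
        if not host or not sep or not port.isdigit():
            raise ValueError(f"bad quorum entry: {entry!r}")
        servers.append((host, int(port)))
    return servers


servers = parse_quorum("hadoop:2181,hadoop1:2181,hadoop2:2181,slave1:2181,slave2:2181")
print(len(servers), "ZooKeeper servers, odd count:", len(servers) % 2 == 1)
print(qualify("/test", "hdfs://cluster1"))
print(qualify("hdfs://cluster2/out", "hdfs://cluster1"))
```

A list that mixes in other separators, such as `hadoop2:2181;slave1:2181`, is rejected by `parse_quorum`, which is a cheap check to run before copying the file to every node.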

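yarn-site.xml

    <property>
      <name>yarn.nodemanager.aux-services</name>
      <value>mapreduce_shuffle</value>
    </property>
    <property>
      <name>yarn.nodemanager.aux-services.mapreduce.shuffle.class</name>
      <value>org.apache.hadoop.mapred.ShuffleHandler</value>
    </property>
    <property>
      <name>yarn.resourcemanager.hostname</name>
      <value>hadoop</value>
    </property>
    <property>
      <name>yarn.log-aggregation-enable</name>
      <value>true</value>
    </property>
    <property>
      <name>yarn.log-aggregation.retain-seconds</name>
      <value>604800</value>
    </property>

mapred-site.xml

    <property>
      <name>mapreduce.framework.name</name>
      <value>yarn</value>
    </property>
    <property>
      <name>mapreduce.job.tracker</name>
      <value>hdfs://hadoop:9001</value>
      <!-- the source shows a bare "true" here; its element name was lost, possibly <final>true</final> -->
    </property>
    <property>
      <name>mapreduce.jobhistory.address</name>
      <value>hadoop:10020</value>
    </property>
    <property>
      <name>mapreduce.jobhistory.webapp.address</name>
      <value>hadoop:19888</value>
    </property>

slaves

    hadoop
    hadoop1
    hadoop2
    slave1
    slave2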

Start ZooKeeper:

    hadoop@hadoop:hadoop-2.7.2$ zkServer.sh start

Format the ZooKeeper cluster

    [hadoop@hadoop1 hadoop-2.7.2]$ bin/hdfs zkfc -formatZK
    [hadoop@slave1 hadoop-2.7.2]$ bin/hdfs zkfc -formatZK
    [hadoop@slave1 hadoop-2.7.2]$ zkCli.sh
    [zk: localhost:2181(CONNECTED) 5] ls /hadoop-ha/cluster
    cluster2   cluster1

Start journalnode on all nodes

    hadoop@hadoop:hadoop-2.7.2$ sbin/hadoop-daemon.sh start journalnode
    starting journalnode, logging to /opt/ha/hadoop-2.7.2/logs/hadoop-hadoop-journalnode-hadoop.out
    hadoop@hadoop:hadoop-2.7.2$

    [hadoop@hadoop1 hadoop-2.7.2]$ bin/hdfs namenode -format -clusterId hadoop1
    16/05/19 15:43:01 INFO common.Storage: Storage directory /opt/ha/hadoop-2.7.2/data/dfs/name has been successfully formatted.
    16/05/19 15:43:01 INFO namenode.NNStorageRetentionManager: Going to retain 1 images with txid >= 0
    16/05/19 15:43:01 INFO util.ExitUtil: Exiting with status 0
    16/05/19 15:43:01 INFO namenode.NameNode: SHUTDOWN_MSG:
    /************************************************************
    SHUTDOWN_MSG: Shutting down NameNode at hadoop1/192.168.2.10
    ************************************************************/
    [hadoop@hadoop1 hadoop-2.7.2]$ ls data/dfs/name/current/
    fsimage_0000000000000000000      seen_txid
    fsimage_0000000000000000000.md5  VERSION
    [hadoop@hadoop1 hadoop-2.7.2]$ sbin/hadoop-daemon.sh start namenode
    starting namenode, logging to /opt/ha/hadoop-2.7.2/logs/hadoop-hadoop-namenode-hadoop1.out
    [hadoop@hadoop1 hadoop-2.7.2]$ jps
    9551 NameNode
    9423 JournalNode
    9627 Jps
    9039 QuorumPeerMain

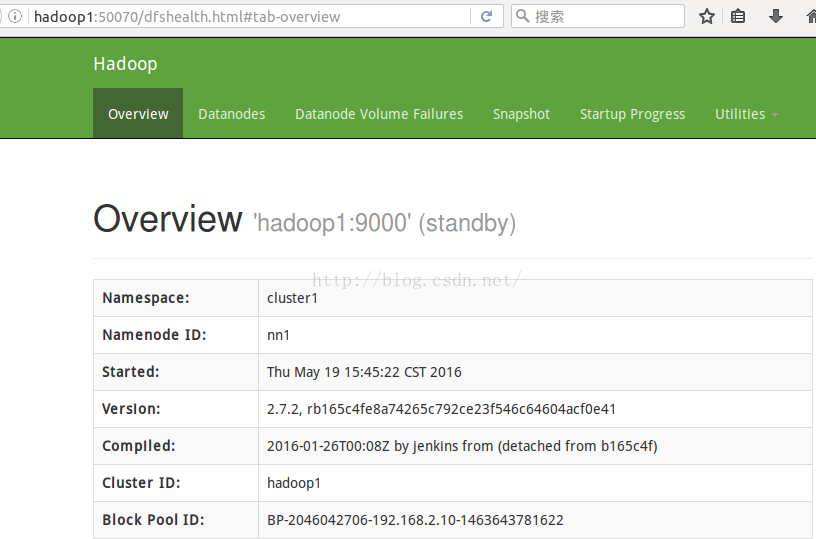

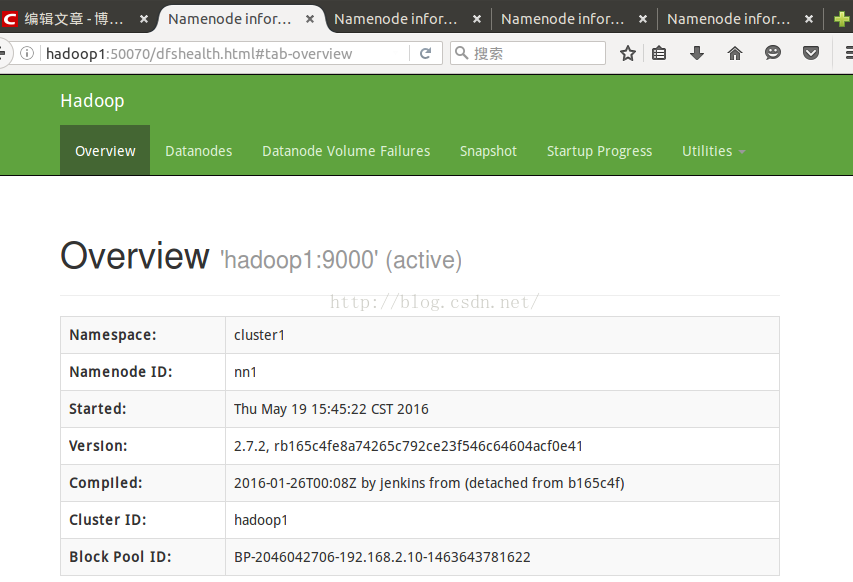

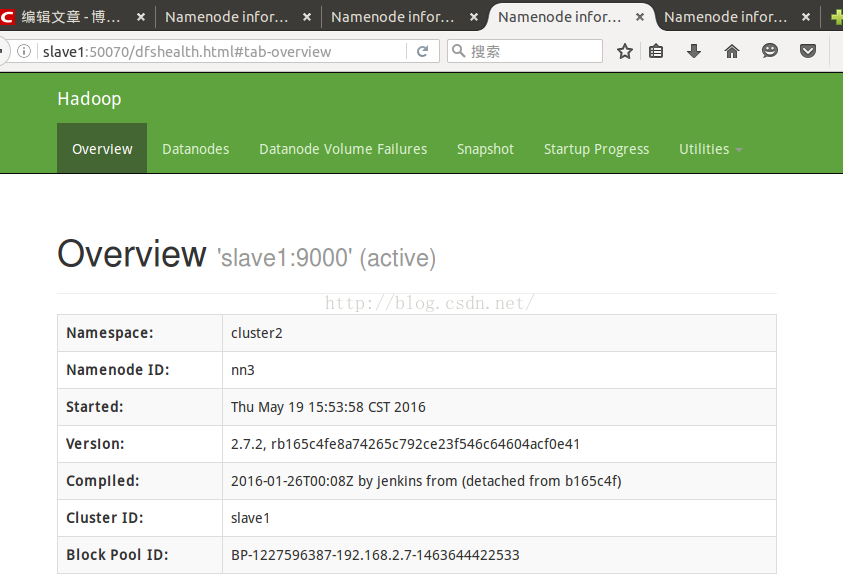

View at http://hadoop1:50070

On the other NameNode of cluster1, sync the formatted metadata and start it

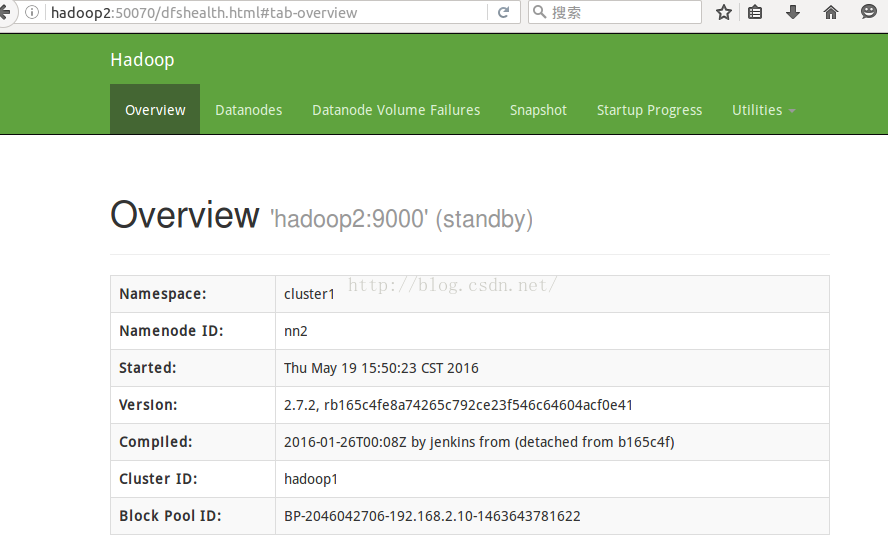

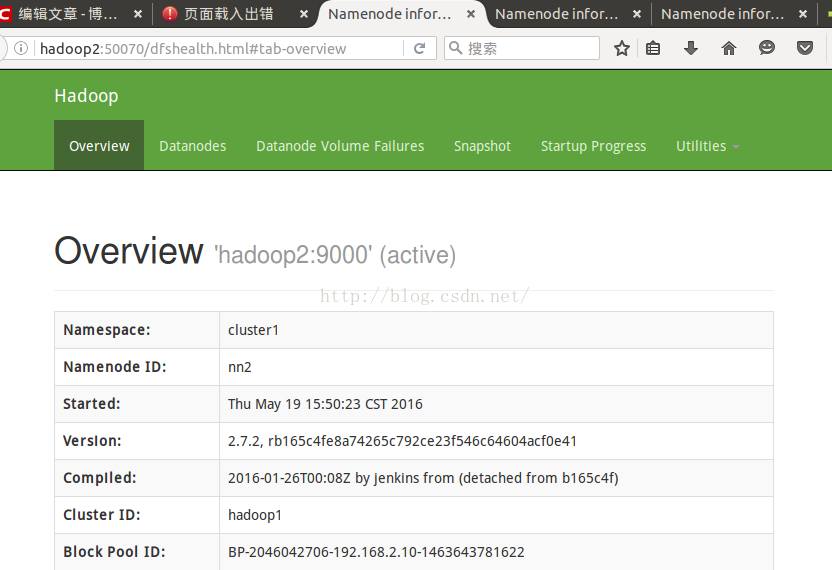

    [hadoop@hadoop2 hadoop-2.7.2]$ bin/hdfs namenode -bootstrapStandby
    ......
    16/05/19 15:48:27 INFO common.Storage: Storage directory /opt/ha/hadoop-2.7.2/data/dfs/name has been successfully formatted.
    16/05/19 15:48:27 INFO namenode.TransferFsImage: Opening connection to http://hadoop1:50070/imagetransfer?getimage=1&txid=0&storageInfo=-63:1280767544:0:hadoop1
    16/05/19 15:48:28 INFO namenode.TransferFsImage: Image Transfer timeout configured to 60000 milliseconds
    16/05/19 15:48:28 INFO namenode.TransferFsImage: Transfer took 0.00s at 0.00 KB/s
    16/05/19 15:48:28 INFO namenode.TransferFsImage: Downloaded file fsimage.ckpt_0000000000000000000 size 353 bytes.
    16/05/19 15:48:28 INFO util.ExitUtil: Exiting with status 0
    16/05/19 15:48:28 INFO namenode.NameNode: SHUTDOWN_MSG:
    /************************************************************
    SHUTDOWN_MSG: Shutting down NameNode at hadoop2/192.168.2.11
    ************************************************************/
    [hadoop@hadoop2 hadoop-2.7.2]$ ls data/dfs/name/current/
    fsimage_0000000000000000000      seen_txid
    fsimage_0000000000000000000.md5  VERSION
    [hadoop@hadoop2 hadoop-2.7.2]$
    [hadoop@hadoop2 hadoop-2.7.2]$ sbin/hadoop-daemon.sh start namenode
    starting namenode, logging to /opt/ha/hadoop-2.7.2/logs/hadoop-hadoop-namenode-hadoop2.out
    [hadoop@hadoop2 hadoop-2.7.2]$ jps
    7196 Jps
    6980 JournalNode
    7120 NameNode
    6854 QuorumPeerMain

View at http://hadoop2:50070

Start the two NameNodes of cluster2 with the same steps; they are omitted here.

Then start all the DataNodes, and start YARN (which must be started on the hadoop node)

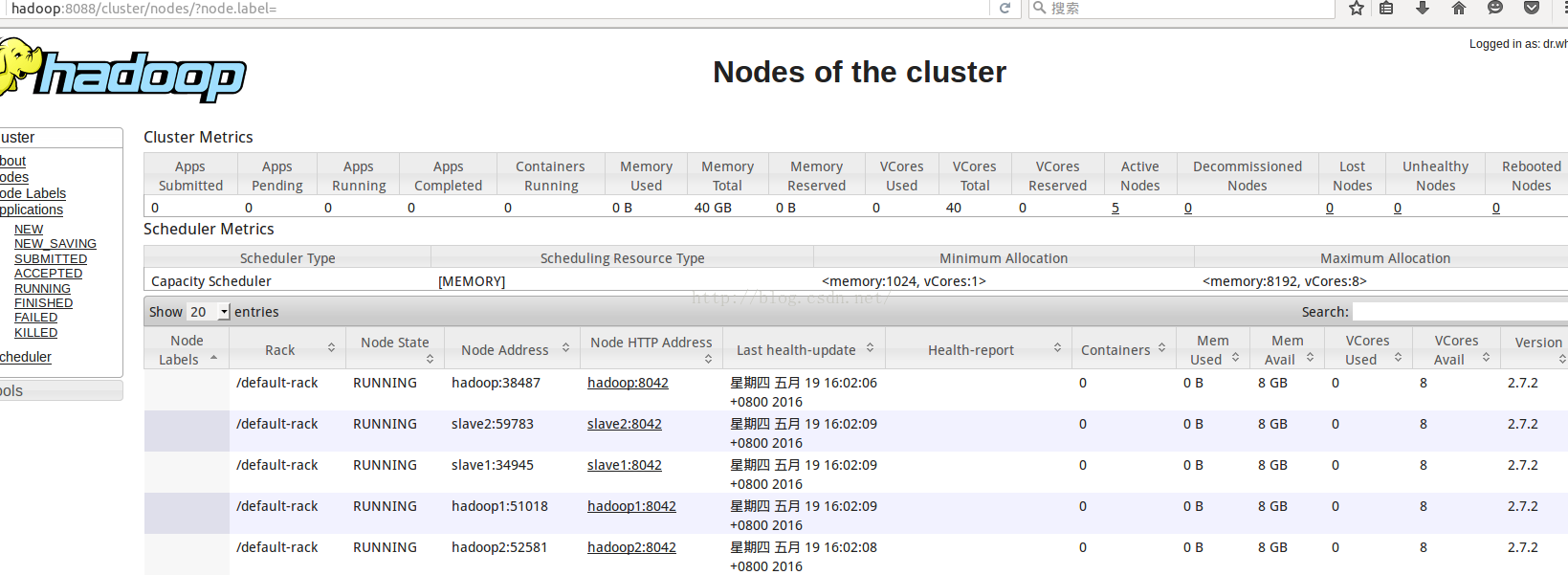

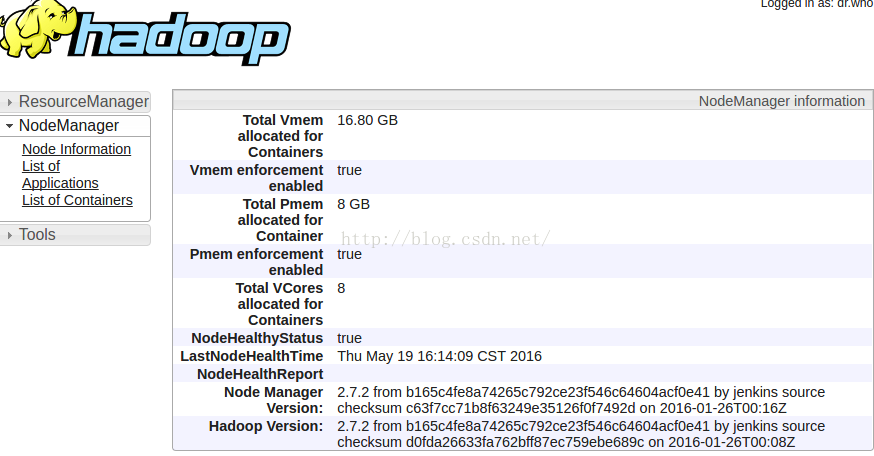

    [hadoop@hadoop1 hadoop-2.7.2]$ sbin/hadoop-daemons.sh start datanode
    hadoop1: starting datanode, logging to /opt/ha/hadoop-2.7.2/logs/hadoop-hadoop-datanode-hadoop1.out
    slave2: starting datanode, logging to /opt/ha/hadoop-2.7.2/logs/hadoop-hadoop-datanode-slave2.out
    hadoop2: starting datanode, logging to /opt/ha/hadoop-2.7.2/logs/hadoop-hadoop-datanode-hadoop2.out
    slave1: starting datanode, logging to /opt/ha/hadoop-2.7.2/logs/hadoop-hadoop-datanode-slave1.out
    hadoop: starting datanode, logging to /opt/ha/hadoop-2.7.2/logs/hadoop-hadoop-datanode-hadoop.out
    hadoop@hadoop:hadoop-2.7.2$ sbin/start-yarn.sh
    starting yarn daemons
    starting resourcemanager, logging to /opt/ha/hadoop-2.7.2/logs/yarn-hadoop-resourcemanager-hadoop.out
    hadoop2: starting nodemanager, logging to /opt/ha/hadoop-2.7.2/logs/yarn-hadoop-nodemanager-hadoop2.out
    hadoop1: starting nodemanager, logging to /opt/ha/hadoop-2.7.2/logs/yarn-hadoop-nodemanager-hadoop1.out
    slave2: starting nodemanager, logging to /opt/ha/hadoop-2.7.2/logs/yarn-hadoop-nodemanager-slave2.out
    hadoop: starting nodemanager, logging to /opt/ha/hadoop-2.7.2/logs/yarn-hadoop-nodemanager-hadoop.out
    slave1: starting nodemanager, logging to /opt/ha/hadoop-2.7.2/logs/yarn-hadoop-nodemanager-slave1.out
    hadoop@hadoop:hadoop-2.7.2$ jps
    19384 JournalNode
    19013 QuorumPeerMain
    20649 Jps
    20241 ResourceManager
    20396 NodeManager
    19815 DataNode
    [hadoop@hadoop1 hadoop-2.7.2]$ jps
    10091 NodeManager
    9551 NameNode
    9822 DataNode
    9423 JournalNode
    10232 Jps
    9039 QuorumPeerMain
    [hadoop@hadoop2 hadoop-2.7.2]$ jps
    7450 NodeManager
    7295 DataNode
    6980 JournalNode
    7120 NameNode
    6854 QuorumPeerMain
    7580 Jps
    [hadoop@slave1 hadoop-2.7.2]$ jps
    3706 DataNode
    3988 Jps
    3374 JournalNode
    3591 NameNode
    3860 NodeManager
    3184 QuorumPeerMain
    [hadoop@slave2 hadoop-2.7.2]$ jps
    3023 QuorumPeerMain
    3643 NodeManager
    3782 Jps
    3177 JournalNode
    3497 DataNode
    3383 NameNode

View the nodes at http://hadoop:8088/cluster/nodes/
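Comparing five `jps` listings by eye is error-prone, so a small helper can do it. The sketch below parses `jps` output and reports which expected daemons are missing on a host; the expected sets are read off the listings in this walkthrough (before zkfc is started), and the function names are made up for the example.

```python
WORKER = {"QuorumPeerMain", "JournalNode", "DataNode", "NodeManager"}

# Expected daemons per host at this point of the walkthrough.
EXPECTED = {
    "hadoop": WORKER | {"ResourceManager"},
    "hadoop1": WORKER | {"NameNode"},
    "hadoop2": WORKER | {"NameNode"},
    "slave1": WORKER | {"NameNode"},
    "slave2": WORKER | {"NameNode"},
}


def parse_jps(output: str) -> set:
    """Return the process names from `jps` output, ignoring jps itself."""
    names = set()
    for line in output.strip().splitlines():
        parts = line.split()
        if len(parts) == 2 and parts[0].isdigit() and parts[1] != "Jps":
            names.add(parts[1])
    return names


def missing_daemons(host: str, jps_output: str) -> set:
    """Daemons expected on `host` that do not appear in its jps output."""
    return EXPECTED[host] - parse_jps(jps_output)


sample = """10091 NodeManager
9551 NameNode
9822 DataNode
9423 JournalNode
10232 Jps
9039 QuorumPeerMain"""
print(missing_daemons("hadoop1", sample) or "all expected daemons are running")
```

An empty result means the host looks as expected; anything else names the daemon to go and check in the logs.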

Start zkfc on all NameNode nodes

    [hadoop@hadoop1 hadoop-2.7.2]$ sbin/hadoop-daemon.sh start zkfc
    starting zkfc, logging to /opt/ha/hadoop-2.7.2/logs/hadoop-hadoop-zkfc-hadoop1.out
    [hadoop@hadoop1 hadoop-2.7.2]$ jps
    10665 DFSZKFailoverController
    9551 NameNode
    9822 DataNode
    9423 JournalNode
    10739 Jps
    9039 QuorumPeerMain
    10483 NodeManager

    [hadoop@hadoop1 hadoop-2.7.2]$ bin/hdfs dfs -mkdir /test
    16/05/19 16:09:19 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable
    [hadoop@hadoop1 hadoop-2.7.2]$ bin/hdfs dfs -put etc/hadoop/*.xml /test
    16/05/19 16:09:36 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable
    # check from slave1
    [hadoop@slave1 hadoop-2.7.2]$ bin/hdfs dfs -ls -R /
    16/05/19 16:11:32 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable
    drwxr-xr-x - hadoop supergroup 0 2016-05-19 16:09 /test
    -rw-r--r-- 2 hadoop supergroup 4436 2016-05-19 16:09 /test/capacity-scheduler.xml
    -rw-r--r-- 2 hadoop supergroup 1185 2016-05-19 16:09 /test/core-site.xml
    -rw-r--r-- 2 hadoop supergroup 9683 2016-05-19 16:09 /test/hadoop-policy.xml
    -rw-r--r-- 2 hadoop supergroup 3814 2016-05-19 16:09 /test/hdfs-site.xml
    -rw-r--r-- 2 hadoop supergroup 620 2016-05-19 16:09 /test/httpfs-site.xml
    -rw-r--r-- 2 hadoop supergroup 3518 2016-05-19 16:09 /test/kms-acls.xml
    -rw-r--r-- 2 hadoop supergroup 5511 2016-05-19 16:09 /test/kms-site.xml
    -rw-r--r-- 2 hadoop supergroup 1170 2016-05-19 16:09 /test/mapred-site.xml
    -rw-r--r-- 2 hadoop supergroup 1777 2016-05-19 16:09 /test/yarn-site.xml
    [hadoop@slave1 hadoop-2.7.2]$

Verify YARN

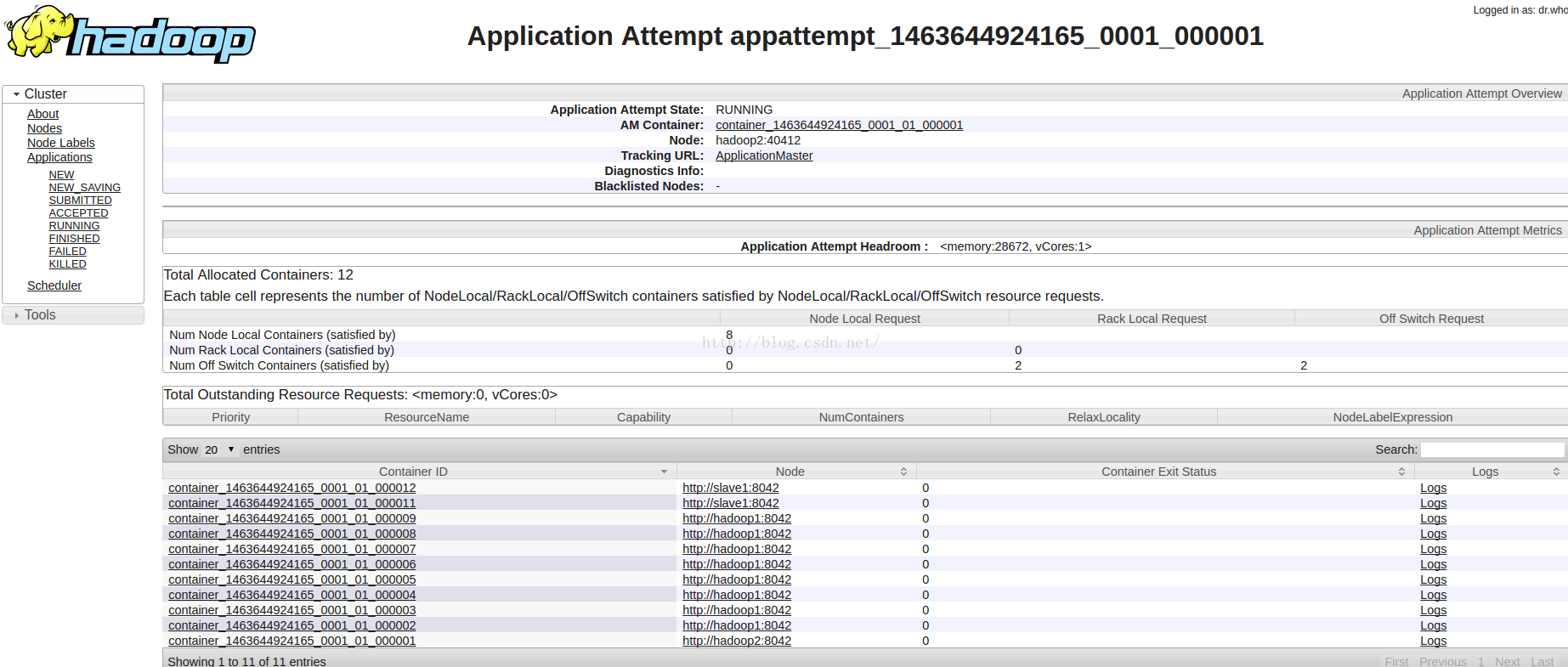

    [hadoop@hadoop1 hadoop-2.7.2]$ bin/hadoop jar share/hadoop/mapreduce/hadoop-mapreduce-examples-2.7.2.jar wordcount /test /out
    16/05/19 16:15:25 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable
    16/05/19 16:15:26 INFO client.RMProxy: Connecting to ResourceManager at hadoop/192.168.2.3:8032
    16/05/19 16:15:27 INFO input.FileInputFormat: Total input paths to process : 9
    16/05/19 16:15:27 INFO mapreduce.JobSubmitter: number of splits:9
    16/05/19 16:15:27 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_1463644924165_0001
    16/05/19 16:15:27 INFO impl.YarnClientImpl: Submitted application application_1463644924165_0001
    16/05/19 16:15:27 INFO mapreduce.Job: The url to track the job: http://hadoop:8088/proxy/application_1463644924165_0001/
    16/05/19 16:15:27 INFO mapreduce.Job: Running job: job_1463644924165_0001
    16/05/19 16:15:35 INFO mapreduce.Job: Job job_1463644924165_0001 running in uber mode : false
    16/05/19 16:15:35 INFO mapreduce.Job: map 0% reduce 0%
    16/05/19 16:15:44 INFO mapreduce.Job: map 11% reduce 0%
    16/05/19 16:15:59 INFO mapreduce.Job: map 11% reduce 4%
    16/05/19 16:16:08 INFO mapreduce.Job: map 22% reduce 4%
    16/05/19 16:16:10 INFO mapreduce.Job: map 22% reduce 7%
    16/05/19 16:16:22 INFO mapreduce.Job: map 56% reduce 7%
    16/05/19 16:16:26 INFO mapreduce.Job: map 100% reduce 67%
    16/05/19 16:16:29 INFO mapreduce.Job: map 100% reduce 100%
    16/05/19 16:16:29 INFO mapreduce.Job: Job job_1463644924165_0001 completed successfully
    16/05/19 16:16:31 INFO mapreduce.Job: Counters: 51
        File System Counters
            FILE: Number of bytes read=25164
            FILE: Number of bytes written=1258111
            FILE: Number of read operations=0
            FILE: Number of large read operations=0
            FILE: Number of write operations=0
            HDFS: Number of bytes read=32620
            HDFS: Number of bytes written=13523
            HDFS: Number of read operations=30
            HDFS: Number of large read operations=0
            HDFS: Number of write operations=2
        Job Counters
            Killed map tasks=2
            Launched map tasks=10
            Launched reduce tasks=1
            Data-local map tasks=8
            Rack-local map tasks=2
            Total time spent by all maps in occupied slots (ms)=381816
            Total time spent by all reduces in occupied slots (ms)=42021
            Total time spent by all map tasks (ms)=381816
            Total time spent by all reduce tasks (ms)=42021
            Total vcore-milliseconds taken by all map tasks=381816
            Total vcore-milliseconds taken by all reduce tasks=42021
            Total megabyte-milliseconds taken by all map tasks=390979584
            Total megabyte-milliseconds taken by all reduce tasks=43029504
        Map-Reduce Framework
            Map input records=963
            Map output records=3041
            Map output bytes=41311
            Map output materialized bytes=25212
            Input split bytes=906
            Combine input records=3041
            Combine output records=1335
            Reduce input groups=673
            Reduce shuffle bytes=25212
            Reduce input records=1335
            Reduce output records=673
            Spilled Records=2670
            Shuffled Maps =9
            Failed Shuffles=0
            Merged Map outputs=9
            GC time elapsed (ms)=43432
            CPU time spent (ms)=30760
            Physical memory (bytes) snapshot=1813704704
            Virtual memory (bytes) snapshot=8836780032
            Total committed heap usage (bytes)=1722810368
        Shuffle Errors
            BAD_ID=0
            CONNECTION=0
            IO_ERROR=0
            WRONG_LENGTH=0
            WRONG_MAP=0
            WRONG_REDUCE=0
        File Input Format Counters
            Bytes Read=31714
        File Output Format Counters
            Bytes Written=13523

View at http://hadoop:8088/
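To read the record counters in that output, it can help to see the same pipeline in miniature. The following is a simplified in-memory sketch of word count with a per-split combiner, not the code of the Hadoop example job; it assumes whitespace tokenization and one combine pass per split, and the counter names in the dictionary are informal labels.

```python
from collections import Counter


def word_count(splits):
    """Count words across input splits and report record counts per stage."""
    # Map + combine: each split is tokenized and pre-aggregated locally.
    partials = [Counter(text.split()) for text in splits]

    # Reduce: partial counts for the same word are summed.
    total = Counter()
    for partial in partials:
        total.update(partial)

    stats = {
        "map_output_records": sum(sum(p.values()) for p in partials),
        "combine_output_records": sum(len(p) for p in partials),
        "reduce_output_records": len(total),
    }
    return dict(total), stats


counts, stats = word_count(["a b a", "b c"])
print(counts)
print(stats)
```

In the job output above the same narrowing is visible: 3041 map output records shrink to 1335 after the combiner, and the reducer emits 673 distinct words.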

Result

    [hadoop@slave1 hadoop-2.7.2]$ bin/hdfs dfs -lsr /out
    lsr: DEPRECATED: Please use 'ls -R' instead.
    16/05/19 16:22:14 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable
    -rw-r--r-- 2 hadoop supergroup 0 2016-05-19 16:16 /out/_SUCCESS
    -rw-r--r-- 2 hadoop supergroup 13523 2016-05-19 16:16 /out/part-r-00000
    [hadoop@slave1 hadoop-2.7.2]$

At this point the web UI shows hadoop1 and slave1 in the Active state. Now stop these two NameNodes and check again.

    [hadoop@hadoop1 hadoop-2.7.2]$ jps
    10665 DFSZKFailoverController
    9551 NameNode
    12166 Jps
    9822 DataNode
    9423 JournalNode
    9039 QuorumPeerMain
    10483 NodeManager
    [hadoop@hadoop1 hadoop-2.7.2]$ sbin/hadoop-daemon.sh stop namenode
    stopping namenode
    [hadoop@hadoop1 hadoop-2.7.2]$ jps
    10665 DFSZKFailoverController
    9822 DataNode
    9423 JournalNode
    12221 Jps
    9039 QuorumPeerMain
    10483 NodeManager

    [hadoop@slave1 hadoop-2.7.2]$ sbin/hadoop-daemon.sh stop namenode
    stopping namenode
    [hadoop@slave1 hadoop-2.7.2]$ jps
    3706 DataNode
    3374 JournalNode
    4121 NodeManager
    5460 Jps
    4324 DFSZKFailoverController
    3184 QuorumPeerMain

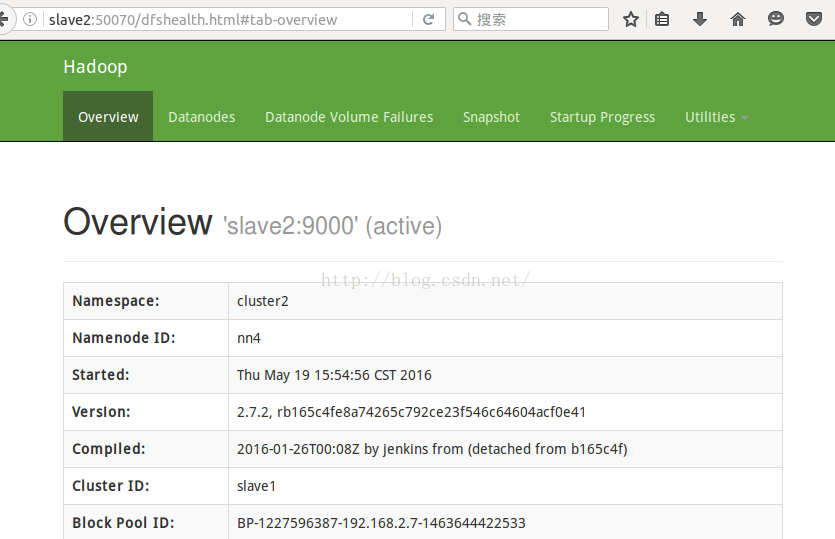

The active NameNodes have now moved to hadoop2 and slave2 respectively.
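Instead of opening each web page, the state can also be read programmatically. The sketch below assumes the NameNode web port 50070 used in this walkthrough and assumes that the NameNode's `/jmx` endpoint exposes a `NameNodeStatus` bean with a `State` attribute; check this against your Hadoop version before relying on it. The helper names are invented for the example.

```python
import json
from urllib.request import urlopen

# Assumed JMX query for the NameNode status bean.
JMX_QUERY = "/jmx?qry=Hadoop:service=NameNode,name=NameNodeStatus"


def state_from_jmx(payload: str) -> str:
    """Extract the HA state ("active" or "standby") from a JMX JSON response."""
    for bean in json.loads(payload).get("beans", []):
        if "State" in bean:
            return bean["State"]
    raise ValueError("no bean with a State attribute in the response")


def fetch_state(host: str, port: int = 50070, timeout: float = 5.0) -> str:
    """Ask one NameNode for its HA state over HTTP."""
    with urlopen(f"http://{host}:{port}{JMX_QUERY}", timeout=timeout) as resp:
        return state_from_jmx(resp.read().decode("utf-8"))


def active_namenodes(states: dict) -> list:
    """Hosts whose reported state is active, sorted by name."""
    return sorted(host for host, state in states.items() if state == "active")


# Offline example with a hand-written response in the assumed format.
sample = ('{"beans": [{"name": "Hadoop:service=NameNode,name=NameNodeStatus",'
          ' "State": "active", "HostAndPort": "hadoop2:8020"}]}')
print(state_from_jmx(sample))
```

After the failover shown above, calling `fetch_state` for hadoop2 and slave2 would be expected to return `active`, while the stopped NameNodes on hadoop1 and slave1 would refuse the connection.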

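That is the basic process for configuring HDFS HA with automatic failover, HDFS Federation and YARN on Hadoop 2.x (2.7.2 in this walkthrough). You can add other settings as needed. ZooKeeper and JournalNode do not have to run on every node; an odd number of instances is enough. In a larger cluster, each of these roles can be placed on a host of its own.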